Managing data used to be about storage and backups. Today, it is about speed and reliability. We have moved from static databases to high-speed “data rivers.” If you are a software engineer or a manager today, you know the pain: broken pipelines, “dirty” data, and manual fixes that take all weekend.

This guide is designed for professionals in India and across the globe who want to stop fighting with data and start mastering it. Whether you are a Data Engineer, a DevOps specialist, or a Lead Manager, the DataOps Certified Professional (DOCP) program is the bridge between manual data work and automated excellence.

What is DataOps?

DataOps isn’t just a set of tools; it’s a mindset. It takes the best parts of DevOps—automation, testing, and collaboration—and applies them to the world of data engineering. The goal is simple: deliver high-quality data to users as fast as possible without breaking the system.

Deep Dive: DataOps Certified Professional (DOCP)

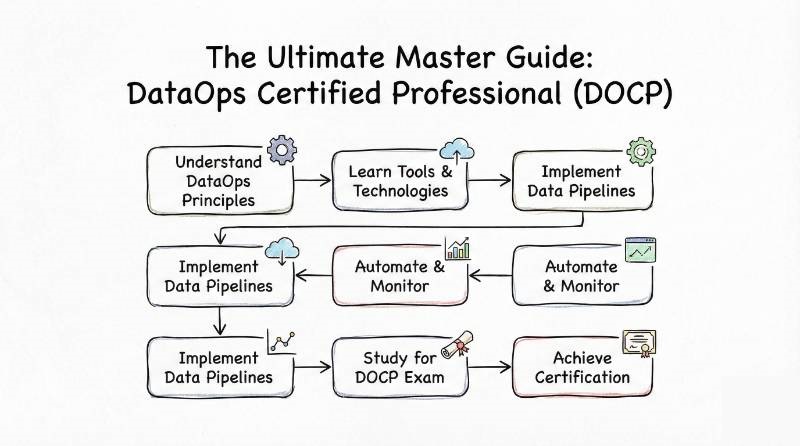

The DataOps Certified Professional (DOCP) is a specialized certification program that validates your ability to manage the entire data lifecycle using modern automation.

What it is

This certification is a professional-level credential that focuses on building and managing automated data pipelines. It covers the DataOps Manifesto, agile data development, and the technical stack required to ensure data quality and observability at scale.

Who should take it

- Software Engineers looking to move into data platform engineering.

- Data Engineers who want to automate manual ETL (Extract, Transform, Load) tasks.

- Managers overseeing data transformation projects who need to understand the technical “why” behind DataOps.

- SREs who are now being asked to manage data reliability.

Skills you’ll gain

- Automated Pipeline Design: Learn to build “Data-as-Code” using CI/CD principles.

- Orchestration Mastery: Use tools like Apache Airflow and Dagster to manage complex workflows.

- Data Quality Testing: Implement automated “circuit breakers” to stop bad data before it hits production.

- Containerization for Data: Run heavy data workloads inside Docker and Kubernetes.

- Observability: Set up real-time monitoring for data drift, pipeline health, and system performance.
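As a rough illustration of the “circuit breaker” idea from the list above, the Python sketch below stops a batch when too many rows are missing a required field. The field name `customer_id`, the 25% threshold, and the class names are hypothetical and chosen only for this example. A real pipeline would more likely rely on a dedicated data quality tool.

```python
from __future__ import annotations

from dataclasses import dataclass


class CircuitBreakerTripped(Exception):
    """Raised when a batch fails a quality check and must not be loaded."""


@dataclass
class QualityGate:
    required_field: str          # hypothetical column that must be populated
    max_null_rate: float = 0.05  # illustrative threshold, not a standard

    def null_rate(self, rows: list[dict]) -> float:
        """Fraction of rows where the required field is missing or None."""
        if not rows:
            return 1.0  # treat an empty batch as fully invalid
        missing = sum(1 for r in rows if r.get(self.required_field) is None)
        return missing / len(rows)

    def check(self, rows: list[dict]) -> list[dict]:
        """Return the rows unchanged, or raise if the null rate is too high."""
        rate = self.null_rate(rows)
        if rate > self.max_null_rate:
            raise CircuitBreakerTripped(
                f"{self.required_field}: null rate {rate:.0%} exceeds "
                f"{self.max_null_rate:.0%}"
            )
        return rows


gate = QualityGate(required_field="customer_id", max_null_rate=0.25)
good = [{"customer_id": 1}, {"customer_id": 2}, {"customer_id": 3}, {"customer_id": None}]
bad = [{"customer_id": None}, {"customer_id": None}, {"customer_id": 7}]

gate.check(good)  # passes: exactly at the threshold, not above it
try:
    gate.check(bad)
except CircuitBreakerTripped as exc:
    print(f"Load blocked: {exc}")
```

Raising an exception, rather than logging a warning, is what makes this a breaker: an orchestrator sees a failed task, so the downstream load never runs.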

Real-world projects you should be able to do

- Automated ETL Pipeline: Design a system that automatically extracts data from various sources, transforms it, and loads it into a cloud warehouse whenever new data arrives.

- Self-Healing Data Streams: Build a pipeline using Kafka and Spark that detects schema changes and alerts the team automatically.

- Data Quality Dashboard: Create a live Grafana dashboard that tracks the “health score” of your data in real time.
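The schema-change detection in the streaming project can be sketched in plain Python before any Kafka or Spark code is written. The example below is a simplified stand-in, not a streaming implementation: `EXPECTED_SCHEMA`, the field names, and the `print` call used in place of a real alert are all assumptions made for illustration.

```python
from __future__ import annotations

# Hypothetical expected schema: field name -> Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}


def detect_schema_drift(record: dict, expected: dict[str, type]) -> dict[str, list[str]]:
    """Compare one record against the expected schema and report differences."""
    added = sorted(set(record) - set(expected))      # fields we did not expect
    removed = sorted(set(expected) - set(record))    # fields that went missing
    retyped = sorted(                                # fields whose type changed
        name
        for name, expected_type in expected.items()
        if name in record and not isinstance(record[name], expected_type)
    )
    return {"added": added, "removed": removed, "retyped": retyped}


def has_drift(report: dict[str, list[str]]) -> bool:
    """True when any category of the report is non-empty."""
    return any(report.values())


record = {"order_id": 42, "amount": "19.99", "region": "IN"}
report = detect_schema_drift(record, EXPECTED_SCHEMA)
if has_drift(report):
    # Stand-in for a real alert (email, chat webhook, pager).
    print(f"Schema drift detected: {report}")
```

In a real stream, the same comparison would typically run per message or per micro-batch, and the alert would go to whatever channel the team already monitors.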

Preparation plan

- 7–14 Days (The Expert Sprint): Focus on the DataOps Manifesto and hands-on tool integration. This is for those already working with data tools who just need to learn the “Ops” side.

- 30 Days (The Professional Path): Spend 1 hour a day. Dedicate the first two weeks to theory and the last two weeks to building the real-world projects in the lab.

- 60 Days (The Deep Dive): Ideal for beginners or career switchers. Take it slow—one tool at a time (NiFi, then Kafka, then Airflow).

Common mistakes

- Ignoring the Culture: Thinking DataOps is only about tools. It’s about how teams talk to each other.

- Testing Too Late: Waiting until the end of the pipeline to check data quality. You must test at every stage.

- Over-Engineering: Trying to automate everything on day one. Start with the most frequent manual tasks.
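To make the “test at every stage” advice concrete, here is a minimal sketch of a pipeline runner that validates the output of each stage before the next one starts. The `Stage` class, the stage names, and the checks are hypothetical. Orchestrators such as Airflow offer their own mechanisms for this, which this sketch does not attempt to reproduce.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Stage:
    name: str
    run: Callable[[list], list]    # transforms the rows
    check: Callable[[list], bool]  # returns True when the output looks sane


class StageCheckFailed(Exception):
    """Raised as soon as one stage produces output that fails its check."""


def run_pipeline(rows: list, stages: list[Stage]) -> list:
    """Run each stage in order, validating its output before moving on."""
    for stage in stages:
        rows = stage.run(rows)
        if not stage.check(rows):
            raise StageCheckFailed(f"check failed after stage '{stage.name}'")
    return rows


stages = [
    Stage(
        "extract",
        lambda _: [{"price": "10"}, {"price": "25"}],  # fake source data
        lambda rows: len(rows) > 0,                     # got at least one row
    ),
    Stage(
        "transform",
        lambda rows: [{"price": int(r["price"])} for r in rows],
        lambda rows: all(r["price"] >= 0 for r in rows),  # no negative prices
    ),
    Stage("load", lambda rows: rows, lambda rows: len(rows) == 2),
]

result = run_pipeline([], stages)
print(result)
```

Because the error names the stage that failed, a bad extract is reported as a bad extract instead of surfacing later as a confusing load failure.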

Best next certification after this

After mastering DataOps, the most logical step is either MLOps Certified Professional (to manage AI models) or FinOps Certified Professional (to manage the high cost of cloud data).

Master Comparison Table: Professional Certifications

| Track | Level | Who it’s for | Prerequisites | Skills Covered | Recommended Order |
| --- | --- | --- | --- | --- | --- |
| DevOps | Professional | Engineers | Basic Linux/Git | CI/CD, Docker, Jenkins | 1st |
| DataOps | Professional | Data Engineers | Basic SQL/Python | Airflow, Kafka, Quality | 2nd |
| DevSecOps | Professional | Security Eng. | DevOps Basics | SAST/DAST, Compliance | 2nd |
| SRE | Professional | Reliability Eng. | System Admin | SLOs, SLIs, Error Budgets | 3rd |
| MLOps | Professional | AI/ML Eng. | Python/ML Models | Model Versioning, Drift | 3rd |
| FinOps | Professional | Cloud Admins | Cloud Basics | Cost Optimization, Billing | 4th |

Choose Your Path: 6 Learning Journeys

Every career is unique. Depending on your interest, you should follow a specific sequence of training.

1. The DevOps Path (The Root)

This is for those who love building the “pipes.” You focus on continuous integration and deployment. It is the foundation for everything else.

2. The DevSecOps Path (The Shield)

For those who believe security is a feature, not a hurdle. You learn how to bake security into the code from day one.

3. The SRE Path (The Stabilizer)

If you are obsessed with “zero downtime,” this is for you. You use software engineering to solve operations problems and keep the lights on.

4. The AIOps/MLOps Path (The Brain)

As AI becomes the norm, we need ways to manage machine learning models like software. This path teaches you how to version and deploy AI at scale.

5. The DataOps Path (The Streamliner)

Your goal is to make data move quickly and accurately. You bridge the gap between “messy data” and “business insights.”

6. The FinOps Path (The Optimizer)

Cloud bills can be scary. This path is for those who want to bridge engineering and finance to ensure every cloud dollar is spent wisely.

Role → Recommended Certifications Mapping

- DevOps Engineer: DevOps Certified Professional → DevSecOps Certified Professional.

- SRE: SRE Certified Professional → Master in Observability Engineering.

- Platform Engineer: Kubernetes Certified Administrator → DevOps Certified Professional.

- Cloud Engineer: AWS/Azure Solutions Architect → FinOps Certified Professional.

- Security Engineer: DevSecOps Certified Professional → Certified Kubernetes Security Specialist.

- Data Engineer: DataOps Certified Professional → MLOps Certified Professional.

- FinOps Practitioner: FinOps Certified Professional → Cloud Economics Certification.

- Engineering Manager: Certified DevOps Manager → FinOps Certified Professional.

Next Certifications to Take

Once you complete your DataOps training, you shouldn’t stop. Here are three directions to grow:

- Same Track (Expertise): Move to Certified DataOps Architect. This allows you to design high-level data strategies for large enterprises.

- Cross-Track (Versatility): Take the MLOps Certified Professional. Since you already know how to move data, learning how to manage the models that use that data makes you indispensable.

- Leadership (Growth): Pursue the Certified DevOps Manager. This helps you move from being the person “doing the work” to the person “leading the team.”

Top Institutions for DataOps Training

Choosing where to learn is as important as what you learn. These institutions are known for their hands-on labs and industry-standard certifications.

- DevOpsSchool: As a global leader in technical training, they offer deep, tool-centric courses with lifetime access to learning materials. Their curriculum is heavily focused on real-world projects and hands-on labs.

- Cotocus: This institution is widely known for its boutique training style and high-quality lab environments. They specialize in simulating complex enterprise challenges to prepare students for real-world scenarios.

- Scmgalaxy: This is a massive community-driven platform that provides extensive resources and tutorials. In addition to training, they offer significant support for SCM and DevOps professionals worldwide.

- BestDevOps: They specialize in intensive bootcamps designed to take an engineer from a beginner to an expert in a short timeframe. Their focus is on practical, job-ready skills that can be applied immediately.

- DataOpsSchool: This is a specialized branch that focuses exclusively on the DataOps lifecycle. They offer niche courses in data governance, advanced pipeline automation, and data orchestration tools that are hard to find elsewhere.

- finopsschool: Provides the financial and cloud-economic training necessary to ensure that your DataOps pipelines are cost-effective as they scale.

FAQs: Career & Value

1. Is the DataOps certification difficult?

It is moderately challenging. You need to understand both data logic and automation pipelines. It is not about memorizing; it’s about solving problems.

2. How long does it take to get certified?

If you are dedicated, you can complete the course and pass the exam in 30 to 45 days.

3. What are the prerequisites?

A basic understanding of SQL and some familiarity with Linux and Git are helpful, but the program starts with the fundamentals.

4. Do I need to know how to code?

Basic Python or Shell scripting is beneficial, as DataOps involves writing “pipelines as code.”
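For a sense of what “pipelines as code” means in practice, the short Python sketch below declares a pipeline as a dependency graph and computes a valid run order using the standard library's `graphlib` module (Python 3.9+). The task names are hypothetical, and real orchestrators add scheduling, retries, and monitoring on top of this basic idea.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract": set(),
    "validate": {"extract"},
    "transform": {"validate"},
    "load": {"transform"},
    "report": {"load"},
}


def execution_order(graph: dict) -> list:
    """Return the tasks in an order that respects every dependency."""
    return list(TopologicalSorter(graph).static_order())


order = execution_order(PIPELINE)
print(" -> ".join(order))
```

Because the pipeline is an ordinary Python file, it can be versioned in Git, reviewed in a pull request, and tested in CI like any other code.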

5. What is the sequence I should follow?

Start with DataOps Foundation, move to DataOps Certified Professional, and eventually pursue Certified DataOps Architect.

6. Will this increase my salary?

It can. DataOps skills are in high demand and the talent pool is still small, which tends to push salaries up.

7. Is this certification recognized globally?

Yes, DevOpsSchool certifications are recognized by major tech firms across India, the US, and Europe.

8. What is the value of DataOps for a Manager?

It helps you understand why projects are delayed and how to build a team that can deliver data faster with fewer errors.

9. Can I switch from a non-tech role to DataOps?

It is possible, but you should start with the “DevOps Foundation” first to get comfortable with the technical environment.

10. How is DataOps different from Data Engineering?

Data Engineering builds the pipeline; DataOps makes the pipeline reliable, automated, and scalable.

11. What tools will I learn?

You will work with Apache Airflow, Kafka, NiFi, Jenkins, and Kubernetes, among others.

12. What are the career outcomes?

You can qualify for roles like DataOps Engineer, Platform Engineer, and Senior Data Architect.

FAQs: DataOps Certified Professional (DOCP) Specifics

1. Does the DOCP include hands-on labs?

Yes. The program is heavily lab-based. You will build actual pipelines in a virtual environment.

2. What is the passing score for the exam?

Typically, you need a score of 70% or higher to earn the certification.

3. Are the classes live or recorded?

Both options are available. You can choose a live interactive batch or self-paced video learning.

4. Is there a project requirement?

Yes. To be certified, you must complete and submit a real-world project that demonstrates your mastery of the tools.

5. What if I miss a live class?

All live sessions are recorded and uploaded to your Learning Management System (LMS) for lifetime access.

6. Can I take the exam online?

Yes, the exam is conducted online through the DevOpsSchool portal.

7. Does the certification expire?

The certification is valid for life, though refresher courses are recommended as tools evolve.

8. Is there job assistance?

Yes, DevOpsSchool provides interview kits, resume reviews, and access to their global alumni network.

Conclusion

The era of “manual data” is over. Companies no longer want to hear that a report is delayed because a pipeline broke. They want systems that are self-healing, automated, and secure.

Becoming a DataOps Certified Professional is about more than just adding a line to your resume. It is about becoming the person who brings order to the chaos of big data. Whether you are looking to boost your salary or simply want to stop the “weekend firefighting,” this certification is your roadmap to success.